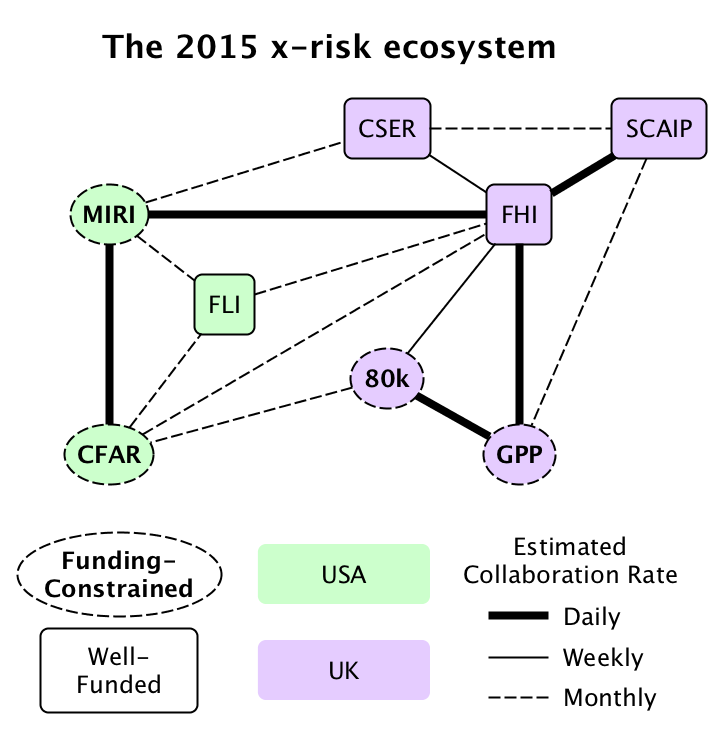

Summary: Because of its plans to increase collaboration and run training/recruiting programs for other groups, CFAR currently looks to me like the most valuable pathway per-dollar-donated for reducing x-risk, followed closely by MIRI, and then by GPP+80k. As well, MIRI looks like the most valuable place for new researchers (funding permitting; see this post), followed very closely by FHI and CSER.

After leaving my job as an algorithmic stock trader earlier this year, I spent 7 weeks traveling among Oxford, Cambridge, and Berkeley, meeting folks from:

(1) FHI – The Oxford Future of Humanity Institute

(2) CSER – The Cambridge Centre for the Study of Existential Risk

(3) FLI – The Future of Life Institute

(4) MIRI – The Machine Intelligence Research Institute

(5) CFAR – The Center for Applied Rationality

(6) CEA – The Centre for Effective Altruism, and specifically its sub-projects,

(6.1) 80k – 80,000 Hours and

(6.2) GPP – The Global Priorities Project, and

(7) SCAIP – The Oxford Strategic Center for Artificial Intelligence Policy.

I wanted to assess where funding (where I want to donate) and human capital (where I want to work) were most valuable for reducing existential risk, or “x-risk” — the most plausible threats that would cause complete human extinction.

While many groups around the world have researched global catastrophic risk (threats that might negatively affect a large proportion of the human population), very few organizations are focussed on existential risk. Because I think they’re actually making headway, and because of the current tractability, neglectedness, and massive long-term importance of x-risk, I consider all of the above groups to be more valuable per-dollar-donated than even GiveWell’s top charities. So, if you’re inclined to donate to any one of them but disagree with my comparisons among them, just go for your favorite, as they are all super awesome in my opinion.

What they do:

In broad brush strokes,

- 1-4 are doing or promoting essential scientific/technical research into how to reduce x-risk, and upon my personal interrogation of many of the parties involved, I consider their research to be of high quality and significance, with many novel and important insights into existential risk and how we might reduce it;

- 5-6.1 are focussing a large part of their attention on helping organizations like 1-4 to accrue the human capital they need to succeed; and

- 6.2-7 are focussed on policy-level x-risk interventions that might follow from the essential x-risk research conducted by groups like 1-4.

I have met these groups in person to assess their competence, have seen their work first-hand, and consider them all to be doing good work.

How they’re doing:

1-3 (FHI, CSER, FLI) are currently very well funded, with each receiving 5-10 million in grants this year, and are now bottlenecked on hiring and training rather than funding.

4 (MIRI) is only moderately well funded by private donations, with an annual budget of around 1.5 million, but is doing quite well with hiring: it has more researchers willing and ready to join the team than its current budget supports, which makes it a ripe opportunity for funding. MIRI is therefore my second favorite for donations this year. It’s also where I have chosen to work myself, for related reasons.

5 (CFAR) is poorly funded, with an annual budget of only around 0.5 million. It has nonetheless been helpful in creating an alumni network whose members founded 3, and in running recruiting and training programs for 4. It has done this by deploying workshops with highly related “applied rationality” curricula, developed over 2011-2015 by testing hundreds of different classes on hundreds of different paid and unpaid participants. Since 5 (CFAR) aims to focus more on recruiting and training for x-risk groups like 1-3 in 2016, and has already been successful in this endeavor for 4, it is my favorite for donations this year.

6.1 (80k) seems to me to have been very helpful in causing many new people to consider x-risk as a career-worthy concern. I think it is solving a problem for x-risk similar to the one 5 addresses, but at an earlier stage of the recruitment pipeline, so 80,000 Hours ties with GPP as my third favorite for donations this year. Update: 80k has reached its funding goal for this year, whereas GPP has not, so I would prioritize GPP.

6.2 (GPP) and 7 (SCAIP) are beginning to address policy questions, which depend on a healthy ecosystem of actual x-risk researchers to query. So I think they will be much more valuable once groups like 1-4 have addressed the problem of hiring and training more researchers, perhaps with help from 5 and 6.1. Since 7 (SCAIP) is already well funded by grant programs, 6.2 (GPP) is my third favorite for donations this year.

If you need more help choosing where to donate:

If you end up feeling like you are choosing between 6.1 (80k) and 6.2 (GPP), I would highly recommend you contact both groups and ask their opinion to help you choose.

Similarly, if you feel you are choosing between my top two recommendations, 4 (MIRI) and 5 (CFAR), I would encourage you to contact either of them.

It seems harder for 4 and 5 to evaluate 6.1 and 6.2, and vice versa, since geography (Berkeley versus Oxford) makes those edges of the collaboration graph somewhat weaker. Because I’ve personally worked more with 4 and 5, I would not at all be shocked to find I was wrong in my comparisons across those weaker edges.

As well, I think the influence I have held with 4 and 5 over the years has brought those organizations’ strategies into some alignment with what I think is likely to work, and vice versa.

So, more than anything else, I think donations to CFAR, MIRI, GPP, and 80k are all extremely good deals-per-dollar for reducing existential risk!

Groups notably excluded from this post:

- OpenAI has over 1 billion in funding pledged, but plans for it were only announced a month ago, so it sort of doesn’t exist yet and hasn’t produced any research. But stay tuned!

- CFI (the Cambridge/Leverhulme Centre for the Future of Intelligence) doesn’t quite exist yet, so I can’t talk about what kind of research it “does”. It already has 10 million pounds of funding, though, and if all goes according to plan, it will be a research institute with strong connections to CSER.

- GCRI (the Global Catastrophic Risk Institute) has very interesting and relevant publications, but it is geographically decentralized, so there is no office for me to have visited. I would probably like to see its members working physically closer together, but I can’t pass much judgement yet, as I haven’t interacted with them much.

- AI Impacts, currently comprising Paul Christiano and Katja Grace, does top-quality research for AI safety in close collaboration with MIRI and FHI. They are not yet at a scale I would call an “organization”, but they will almost surely have my full support if they put forth plans to grow.

- OpenPhil (the Open Philanthropy Project) has given 1.8 million in grant funding via FLI and intends to continue investigating and supporting x-risk research, among many other non-x-risk causes. Stay tuned.

- REG (Raising for Effective Giving) has a category of meta charities it recommends funding, including CFAR and MIRI, as well as various other causes unrelated to x-risk. I don’t know them very well personally, so I can’t speak to whether they plan to increase or decrease their focus on x-risk, but I’m very glad for their support so far.