Looking in at the Earth from the outside, if you noticed that human beings were about to replace themselves as the most intelligent agents on the planet, would you think it unreasonable for 1% of their effort to be spent explicitly reasoning about that transition? How about 0.1%?

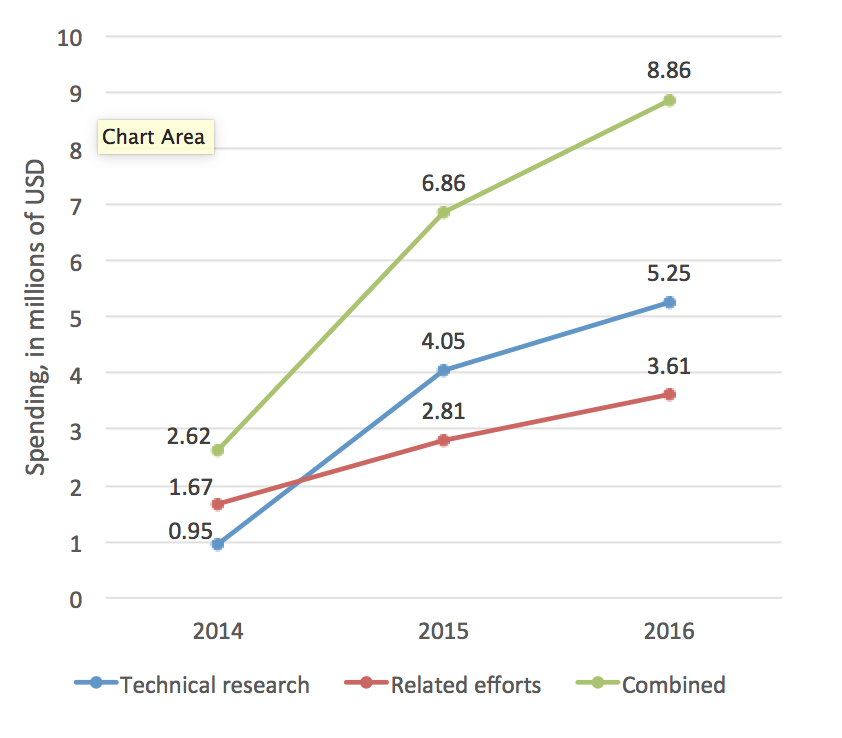

Well, world GDP is currently around $75 trillion, and in total, our species is spending around $9MM/year on alignment research in preparation for human-level AI (HLAI). That’s $5MM on technical research distributed across 24 projects with a median annual budget of $100k, and $4MM on related efforts, such as recruitment and qualitative studies like this blog post, distributed across 20 projects with a median annual budget of $57k. (I computed these numbers by tallying spending from a database I borrowed from Sebastian Farquhar at the Global Priorities Project, which uses a much more liberal definition of “alignment research” than I do.) I predict spending will at least roughly double in the next 1-2 years, and frankly, I am underwhelmed…

There are good signs, of course. For example, the MIT Media Lab and the Berkman Klein Center for Internet and Society at Harvard University will serve as the founding anchor institutions of a new initiative that aims to bridge the gap between the humanities, the social sciences, and computing by addressing the global challenges of artificial intelligence (AI) from a multidisciplinary perspective.

This is not HLAI alignment research. But it’s an awesome step in the right direction. *But* it’s nowhere near what’s needed. Why?

The lag time from major successes in deep learning to broadly socially concerned research funding like that of the Media Lab / Berkman Klein initiative has been several years, depending on how you count. We need that reaction time to keep shrinking until our civilization becomes proactive, so that our civilizational capability to align and control superintelligent machines exists before the machines themselves do. I suspect this might require spending more than 0.00001% of world GDP on alignment research for human-level and superhuman AI.
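As a rough check, using only the approximate figures given earlier (about $9MM/year of alignment spending against about $75 trillion of world GDP), current spending works out to roughly 0.00001% of world GDP. By comparison, the 0.1% level from the opening question would be about $75 billion per year, on the order of several thousand times current spending:

```latex
\begin{aligned}
\frac{\$9 \times 10^{6}}{\$7.5 \times 10^{13}} &= 1.2 \times 10^{-7} = 0.000012\% \\
0.1\% \times \$7.5 \times 10^{13} &= \$7.5 \times 10^{10} \approx 8{,}300 \times \$9 \times 10^{6}
\end{aligned}
```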

Granted, the transition to spending a reasonable level of species-scale effort on this problem is not trivial, and I’m not saying the solution is to throw money at it undirectedly. But I am saying that we are nowhere near done with the on-ramp to acting even remotely sanely, at a societal scale, in anticipation of HLAI. And as long as we’re not there, the people who know this need to keep saying it.