Posted in Effectiveness

I get a lot of email, and unfortunately, template email responses are not yet integrated into the mobile version of Google Inbox. So, until then, please forgive me if I send you this page as a response! Hopefully it is better than no response at all.

Thanks for being understanding.

Continue reading

Posted in AI Safety, Effectiveness, Life

Summary: This is a tutorial on how to properly acknowledge that your decision heuristics are not local to your own brain, and that as a result, it is sometimes normatively rational for you to act in ways that are deserving of trust, for no reason other than to have deserved that trust in the past.

Related posts: I wrote about this 6 years ago on LessWrong (“Newcomb’s problem happened to me”), and last year Paul Christiano also gave numerous consequentialist considerations in favor of integrity (“Integrity for consequentialists”) that included this one. But since I think now is an especially important time for members of society to continue honoring agreements and mutual trust, I’m giving this another go. I was somewhat obsessed with Newcomb’s problem in high school, and have been milking insights from it ever since. I really think folks would do well to actually grok it fully.

You know that icky feeling you get when you realize you almost just fell prey to the sunk cost fallacy, and are now embarrassed at yourself for trying to fix the past by sabotaging the present? Let’s call this instinct “don’t sabotage the present for the past”. It’s generally very useful.

However, sometimes the usually-helpful “don’t sabotage the present for the past” instinct can also lead people to betray one another when there will be no reputational costs for doing so. I claim that not only is this immoral, but even more fundamentally, it is sometimes a logical fallacy. Specifically, whenever someone reasons about you and decides to trust you, you wind up in a fuzzy version of Newcomb’s problem where it may be rational for you to behave somewhat as though your present actions are feeding into their past reasoning process. This seems like a weird claim to make, but that’s exactly why I’m writing this post.
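For readers who haven't met Newcomb's problem, here is a minimal sketch of the standard version with an imperfect predictor. The payoffs (\$1,000,000 in the opaque box, \$1,000 in the transparent one) are the usual textbook numbers, assumed here rather than taken from this post. The sketch computes each option's expected payoff conditional on your choice, which is precisely the "as though your present actions feed into their past reasoning" move; a reader who rejects that conditioning (as causal decision theory does) will read the same numbers differently, and that disagreement is the whole puzzle.

```python
# Standard textbook Newcomb payoffs (assumed; not figures from this post).
OPAQUE_PRIZE = 1_000_000   # in the opaque box iff the predictor expects one-boxing
TRANSPARENT_PRIZE = 1_000  # always sitting in the transparent box


def expected_payoffs(accuracy: float) -> tuple[float, float]:
    """Return (one-box EV, two-box EV), conditioning on your own choice,
    when the predictor is right with probability `accuracy`."""
    one_box = accuracy * OPAQUE_PRIZE
    two_box = (1 - accuracy) * OPAQUE_PRIZE + TRANSPARENT_PRIZE
    return one_box, two_box


def break_even_accuracy() -> float:
    """Solve a*M = (1 - a)*M + T for a: the accuracy where both options tie."""
    return (OPAQUE_PRIZE + TRANSPARENT_PRIZE) / (2 * OPAQUE_PRIZE)


for a in (0.5, 0.6, 0.9, 0.99):
    one, two = expected_payoffs(a)
    print(f"accuracy={a:.2f}  one-box={one:>12,.0f}  two-box={two:>12,.0f}")
print(f"one-boxing pays more once accuracy exceeds {break_even_accuracy():.4f}")
```

With these payoffs, one-boxing comes out ahead as soon as the predictor beats a coin flip by even a little (break-even accuracy 0.5005), which is one way to see why a merely "fuzzy" predictor, like a person deciding whether to trust you, can still matter.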

Continue reading

Posted in Altruism, Effectiveness, Life

Dear liberal American friends: please pair readings of liberal media with viewings of Fox News or other conservative media on the same topics. This will take work. They will say things you disagree with, using words you are unfamiliar with. You’ll have to stop scrolling down on Facebook and actively google phrases like “Trump executive order to protect America.” That may sound hard, but the integrity of your country depends on you doing it.

You’ve probably heard about the President’s executive order restricting immigration from seven countries, which led to the mistreatment of legal visa holders and permanent residents of the United States in airports. You probably also understand that there is a huge difference between ruling out new visas from those countries, and dishonoring existing ones. The latter is breaking a promise. Dishonoring agreements like that makes you untrustworthy, and that is very bad for cooperation. Right?

Well, hear this. Continue reading

Posted in Altruism, Effectiveness

From an outside view, looking in at the Earth, if you noticed that human beings were about to replace themselves as the most intelligent agents on the planet, would you think it unreasonable if 1% of their effort were being spent explicitly reasoning about that transition? How about 0.1%?

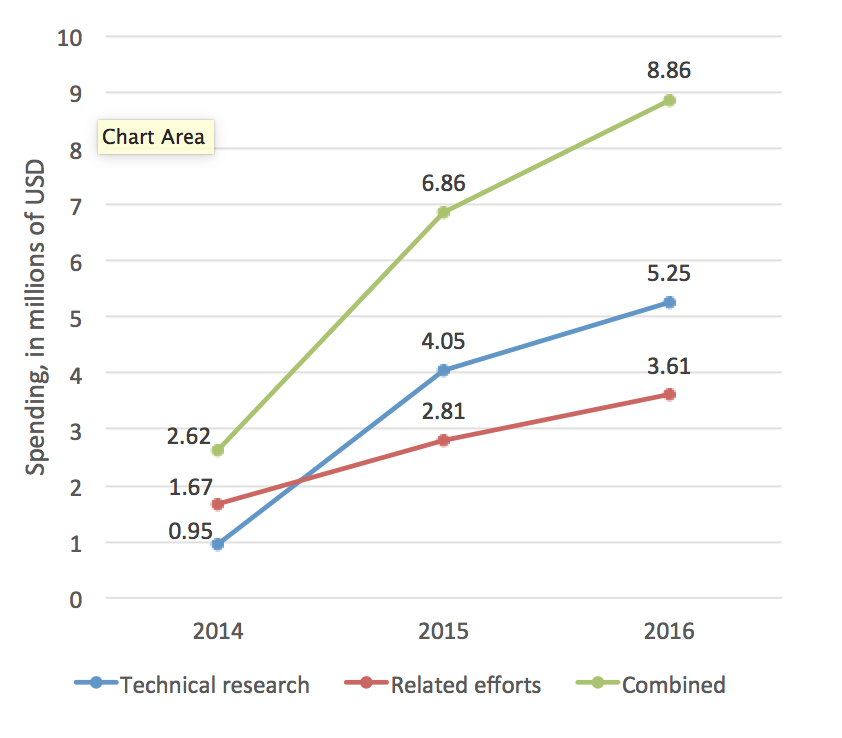

Well, currently, world GDP is around \$75 trillion, and in total, our species is spending around \$9MM/year on alignment research in preparation for human-level AI (HLAI). That’s \$5MM on technical research distributed across 24 projects with a median annual budget of \$100k, and \$4MM on related efforts, like recruitment and qualitative studies like this blog post, distributed across 20 projects with a median annual budget of \$57k. (I computed these numbers by tallying spending from a database I borrowed from Sebastian Farquhar at the Global Priorities Project, which uses a much more liberal definition of “alignment research” than I do.) I predict spending will at least roughly double in the next 1-2 years, and frankly, am underwhelmed…
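The gap between the opening question and these figures is easy to check. Here is a back-of-envelope sketch using only the numbers quoted above (\$75 trillion world GDP, \$9MM/year on alignment); it's a sanity check on orders of magnitude, not a new estimate, and the function names are just illustrative.

```python
WORLD_GDP = 75e12         # dollars per year, as quoted in the post
ALIGNMENT_SPENDING = 9e6  # dollars per year: ~$5MM technical + ~$4MM related efforts


def share_of_gdp(spending: float, gdp: float) -> float:
    """Fraction of world GDP represented by a yearly spending level."""
    return spending / gdp


def shortfall_multiple(target_share: float, spending: float, gdp: float) -> float:
    """How many times larger spending would need to be to reach target_share of GDP."""
    return target_share * gdp / spending


share = share_of_gdp(ALIGNMENT_SPENDING, WORLD_GDP)
print(f"current share of world GDP: {share * 100:.6f}%")

for target in (0.01, 0.001):  # the 1% and 0.1% benchmarks from the opening question
    budget = target * WORLD_GDP
    multiple = shortfall_multiple(target, ALIGNMENT_SPENDING, WORLD_GDP)
    print(f"{target:.1%} of world GDP is ${budget / 1e9:,.0f}B per year, "
          f"about {multiple:,.0f}x current spending")
```

Current spending works out to roughly 0.000012% of world GDP, so even the 0.1% benchmark would be several thousand times today's level.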

Posted in AI Safety, Altruism, Effectiveness

I think donating to charity is great, especially if you make more than \$100k per year, placing you well past the threshold where your well-being depends heavily on income (somewhere around \$70k, depending on who does the analysis). I’ve been in that boat before, and donated more than 100% of my disposable income to charity. However, I was also particularly well-positioned to know where money should go at that time, which made donating especially worth doing. I haven’t made any kind of official pledge to always donate money, because I take pledges/promises very seriously, and for me personally, taking such a pledge seems like a bad idea, even accounting for its signalling value. I’m writing this blog post mainly to reduce the social pressure on folks who earn less than \$100k per year to donate, while at the same time encouraging folks who earn more to consider donating more seriously.

Continue reading

Posted in Altruism, Effectiveness

(Share this post to encourage folks with rational, altruistic leanings to vote more. I originally posted this to LessWrong in 2012, but I figured it was worth re-posting.)

Summary: It’s often argued that voting is irrational, because the probability of affecting the outcome is so small. But the outcome itself is extremely large when you consider its impact on other people. I estimate that for most people, voting is worth a charitable donation of somewhere between \$100 and \$1.5 million. For me, the value came out to around \$56,000. So I figure something on the order of \$1000 is a reasonable evaluation (after all, I’m writing this post because the number turned out to be large according to this method, so regression to the mean suggests I err on the conservative side), and that’s big enough to make me do it.

Moreover, in swing states the value is much higher, so taking a 10% chance at convincing a friend in a swing state to vote similarly to you is probably worth thousands of expected donation dollars, too. (This is an important move to consider if you’re in a fairly robustly red-or-blue state like New York, California, or Texas where Gelman et al. estimate that “the probability of a decisive vote is closer to 1 in a billion.”) I find EV calculations like this for voting or vote-trading to be much more compelling than the typical attempts to justify voting purely in terms of signal value or the resulting sense of pride in fulfilling a civic duty.
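The expected-value reasoning in these two paragraphs can be sketched in a few lines. The formula (probability your vote is decisive, times the donation-equivalent value of the better outcome) is a simplification, and every input except Gelman et al.'s 1-in-a-billion figure is a hypothetical placeholder, not a number from the original post.

```python
def vote_value(p_decisive: float, outcome_value: float) -> float:
    """Expected donation-equivalent value of one vote.

    p_decisive: probability that this single vote decides the election.
    outcome_value: hypothetical dollar-equivalent value, to everyone affected,
    of the better candidate winning.
    """
    return p_decisive * outcome_value


def persuasion_value(p_convince: float, friend_vote_value: float) -> float:
    """Expected value of trying to get a friend to vote like you."""
    return p_convince * friend_vote_value


OUTCOME_VALUE = 1e12   # hypothetical: $1 trillion of impact on other people
SAFE_STATE_P = 1e-9    # Gelman et al.'s rough figure for non-swing states
SWING_STATE_P = 1e-7   # hypothetical: a far likelier decisive vote in a swing state

safe = vote_value(SAFE_STATE_P, OUTCOME_VALUE)
swing = vote_value(SWING_STATE_P, OUTCOME_VALUE)
friend = persuasion_value(0.10, swing)  # the "10% chance at convincing a friend"

print(f"vote in a safe state:       ${safe:,.0f}")
print(f"vote in a swing state:      ${swing:,.0f}")
print(f"10% shot at a swing friend: ${friend:,.0f}")
```

Swapping in other inputs shows why the range quoted above is so wide: the result scales linearly with both the decisive-vote probability and the value placed on the outcome.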

Posted in Altruism, Effectiveness

Since a lot of interest in AI alignment has started to build, I’m getting a lot more emails of the form “Hey, how can I get into this hot new field?”. This is great. In the past I was getting so few messages like this that I could respond to basically all of them with many hours of personal conversation.

But now I can’t respond to everybody anymore, so I have a new plan: leverage academia.

To grossly oversimplify things, here’s the heuristic. Continue reading

Posted in AI Safety, Effectiveness

Please share this if you think anyone you know might be interested.

Sometimes in my research I have to do some task on a computer that I could easily outsource, e.g., adding bibliographical data to a list of papers (i.e., when they were written, who the authors were, etc.). If you think you might be interested in trying some work like this, in exchange for

Sometimes the world needs you to think new thoughts. It’s good to be humble, but having low subjective credence in a conclusion is just one way people implement humility; another way is to feel unentitled to form your own belief in the first place, except by copying an “expert authority”. This is especially bad when there essentially are no experts yet — e.g. regarding the nascent sciences of existential risks — and the world really needs people to just start figuring stuff out. Continue reading

Posted in Altruism, Effectiveness

There are surprisingly many impediments to becoming comfortable making personal use of subjective probabilities, or “credences”: some conceptual, some intuitive, and some social. However, Philip Tetlock has found that thinking in probabilities is essential to being a Superforecaster, so it is perhaps a skill and tendency worth cultivating on purpose. Continue reading

Posted in Altruism, Effectiveness

10 years after my binary search through dietary supplements, which found that a specific blend of B and C vitamins was particularly energizing for me, a CBC News article reported that the blend I’d used — called “Emergen-C” — did not actually contain all of the vitamin ingredients on its label. Continue reading
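The "binary search through dietary supplements" idea can be illustrated with a generic halving search. This is a minimal sketch, assuming exactly one ingredient is responsible for the effect and that a hypothetical `has_effect` trial reliably tells you whether a given subset contains it; real supplement trials are noisy, so treat this as the idealized version only. The ingredient names are illustrative, not the ones from the original experiment.

```python
from typing import Callable, Sequence, TypeVar

T = TypeVar("T")


def find_active_item(items: Sequence[T], has_effect: Callable[[Sequence[T]], bool]) -> T:
    """Binary-search for the single item responsible for an effect.

    Assumes exactly one item causes the effect, and that has_effect(subset)
    returns True iff that item is in the subset.
    """
    candidates = list(items)
    if not candidates:
        raise ValueError("no items to search")
    while len(candidates) > 1:
        mid = len(candidates) // 2
        first_half = candidates[:mid]
        # Test one half; the culprit is either in it or in the other half.
        candidates = first_half if has_effect(first_half) else candidates[mid:]
    return candidates[0]


# Hypothetical ingredient list and stand-in trial; in real life each call
# would be days of taking only that subset and noting how you feel.
supplements = ["vitamin B6", "vitamin B12", "vitamin C", "magnesium", "zinc"]
print(find_active_item(supplements, lambda subset: "vitamin B12" in subset))
```

Each round halves the candidates, so n ingredients need only about log2(n) rounds of trials instead of n; the catch is that a single noisy trial sends the search down the wrong half.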

Posted in Altruism, Effectiveness, Life

When I was 19 and just beginning my PhD, I found myself with a lot of free time and flexibility in my schedule. Naturally, I decided to figure out which dietary supplements I should take. Continue reading

Posted in Effectiveness, Life

Edit: my employer was eventually able to order me an e-ink monitor, so the reward is off 🙂

I would like to write LaTeX on a wireless-enabled e-ink display with a 13″ or larger screen to avoid visual fatigue. If you solve this problem for me, I will pay you a $200 reward, be extremely grateful, and write a blog post explaining your solution so that others might benefit 🙂 Some examples that I would consider solutions: Continue reading

My main bottleneck as a researcher right now is that I have various bureaucracies I need to follow up with on a regular basis, which reduce the number of long, uninterrupted periods I can spend on research. I could really use some help with this. Continue reading

Having a space to write things down frees up your mind — specifically, your executive system — from the task of holding things in working memory, so you can focus your attention on generating new thoughts instead of looping on your most recent ones to keep them alive. Writing down what’s in your head — math, plans, feelings, whatever — can start paying cognitive dividends in about 5 seconds, and can make the difference between a productive thinking day and a lame one. Continue reading

Summary: I think the standardized 8-week MBSR course format is better designed than most introductory meditation practices, and have found David Weinberg in particular to be an excellent mindfulness instructor. Since something like 30 to 100 people have asked me to recommend a way to learn/practice mindfulness, I’m batch-answering with this post. Continue reading

Posted in Effectiveness, Life

Note: I’m writing about this technique to (1) reduce the overhead cost of testing it, and (2) illustrate what I consider good practices for “rolling out” a new technique to be added to a rationality curriculum. Although the technique seems super-useful from my first-person perspective, experience says it probably needs to undergo several tests and revisions before it will actually work as intended, even, I suspect, for most readers of my blog. Continue reading

Posted in Effectiveness, Life

Summary: Since I offered to answer questions about my pledge to donate 10% of my annual salary to CFAR as a form of existential risk reduction, the question “Why doesn’t CFAR do something that will scale faster than workshops?” keeps coming up, so I’m answering it here. Continue reading

Posted in Altruism, Effectiveness

Summary: The common attitude that “You think too much” might be better parsed as “You don’t experiment enough.” Once you’ve got an established procedure for living optimally in «setting», be a good scientist and keep trying to falsify your theory when it’s not too costly to do so.

Continue reading

Posted in Effectiveness, Life

Summary: Develop an allergy to saying “Will anyone do X?”. Instead query for more specific error signals: Continue reading

Posted in Altruism, Effectiveness

Summary: Because of its plans to increase collaboration and run training/recruiting programs for other groups, CFAR currently looks to me like the most valuable pathway per-dollar-donated for reducing x-risk, followed closely by MIRI, and GPP+80k. As well, MIRI looks like the most valuable place for new researchers (funding permitting; see this post), followed very closely by FHI, and CSER. Continue reading

(Follow-up to Fun does not preclude burnout)

Sometimes I decide to spend a few weeks or months putting some of my social needs on hold in favor of something specific, like a deadline. But after that’s done, and I “have free time” again, I often find myself leaning toward work as a default pastime. When I ask my intuition “What’s a fun thing to do this weekend?”, I get a resounding “Work!” Continue reading

Posted in Effectiveness, Life

As far as I can tell, I’ve never experienced burnout, but I think that’s only because I notice when I’m getting close. And in recent years, I’ve had a number of friends, especially those interested in Effective Altruism, make the mistake of burning out while having fun. So, I wanted to make a public service announcement: The fact that your work is fun does not mean that you can’t burn out. Continue reading

Posted in Effectiveness, Life

When I realized this principle, I experienced around a 2x or 3x increase in my rate of causing-people-to-do-things-over-email, out of the “usually doesn’t work” range into the “usually works” range. I find myself repeating this advice a lot in an attempt to boost the effectiveness of friends interested in effective altruism and related work, so I’m making a blog post to make it easier. Continue reading

Posted in Effectiveness

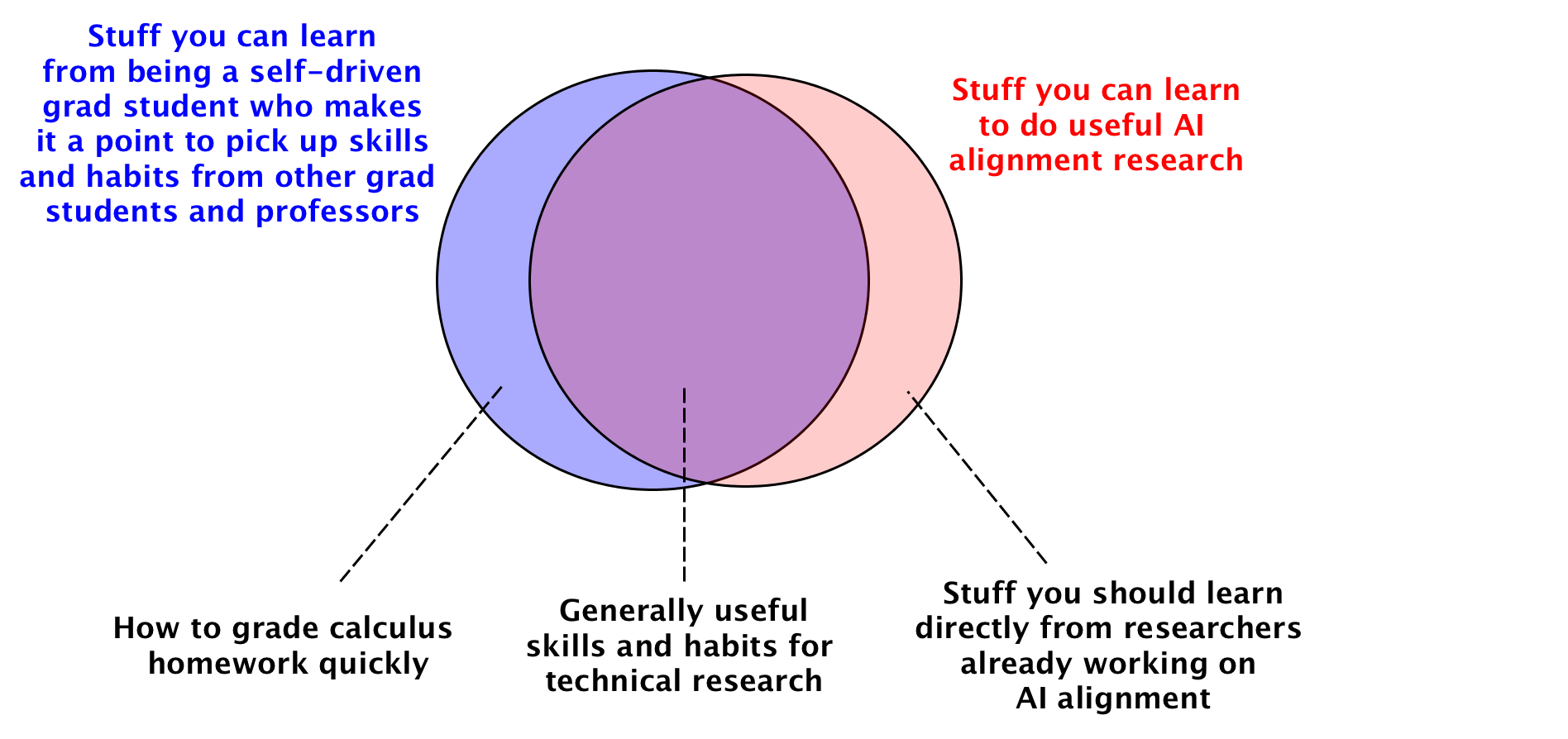

Among my friends interested in rationality, effective altruism, and existential risk reduction, I often hear: “If you want to have a real positive impact on the world, grad school is a waste of time. It’s better to use deliberate practice to learn whatever you need instead of working within the confines of an institution.” Continue reading

Posted in Effectiveness

Comments Off on Willpower Depletion vs Willpower Distraction

Posted in Effectiveness